Building on Stage 1 of this app, Stage 2 adds voice interaction. It combines a locally trained natural language processing model built with Nlp.js, which uses machine learning to detect and analyze phrases, with voice recognition through IBM Watson cloud services. Because of compatibility problems, limited testing, and privacy concerns, the published version contains only the chat feature, which lets users add items to their lists. The chat robot talks with the user over multiple rounds and extracts the relevant information to add to the existing lists. The app is built on the Expo managed workflow and therefore supports multiple platforms. A future published version will integrate TensorFlow and use Float32Array audio data to train and run voice recognition locally. The dark or light theme follows the user’s system default. I built this app entirely on my own. More details follow:

Usage

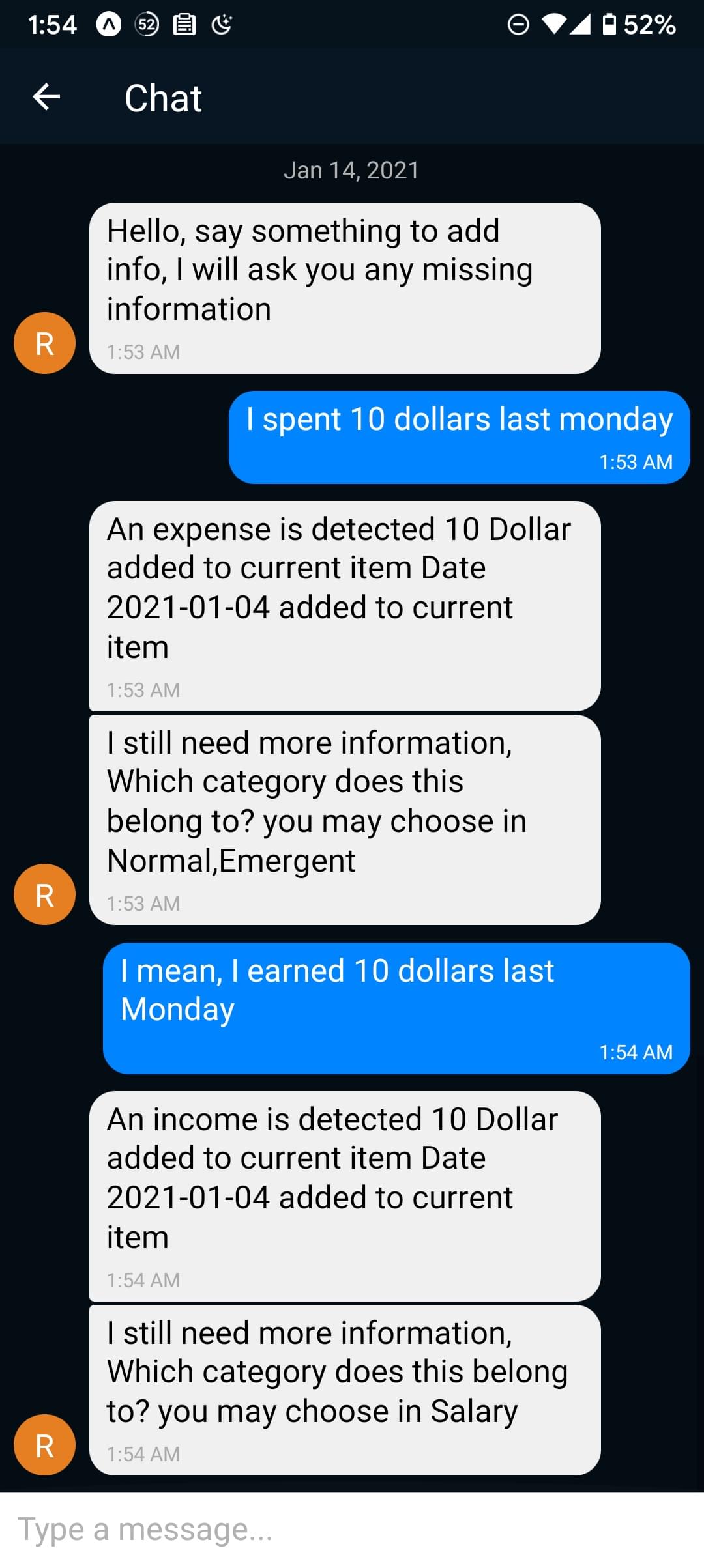

Starting from the Stage 1 interface, tap the floating add button and select the microphone icon. After a moment, the chat interface pops up. See an example of the released app at the end of this page.

Running

Click here for source code.

Click here for a runnable Expo version.

Android and iOS packages are ready, but GitHub's upload limits prevent me from uploading them. Users can always build their own with expo build:ios or expo build:android -t apk.

Compile from source

To compile and run locally:

    git clone https://github.com/ShaokangJiang/CapitalTwo-Financial.git

Then install the dependencies, start the Expo development server, and scan the QR code shown on screen with your phone.

Entity extraction

For details on defining entities and the entity extraction steps, refer to Nlp.js in Expo usage, which includes sample code.

Entity information, such as the amount and title, is extracted from the conversation between you and the machine. The description is the chat between you and the robot. Because the model is trained locally, the specific spending and expense categories are added as enum entities and can therefore be extracted as well. The user just needs to answer the questions the machine asks, and everything will be set up. For the detailed syntax, check the repo here.
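To illustrate the multi-round flow described above, here is a minimal sketch in plain TypeScript. It is not the app's code and does not use Nlp.js: the category list, the `fillSlots` and `nextQuestion` helpers, and the question wording are all hypothetical, and a simple regular expression and substring check stand in for the trained model.

```typescript
// Hypothetical category list; the app's real enum values are not shown here.
const CATEGORIES: string[] = ["food", "transport", "entertainment"];

interface Slots {
  amount?: number;
  category?: string;
}

// Merge whatever can be found in one user message into the slots collected so far.
// Slots that are already filled are left untouched.
function fillSlots(slots: Slots, message: string): Slots {
  const next: Slots = { amount: slots.amount, category: slots.category };

  // First number in the message, with an optional decimal part.
  const amountMatch = /(\d+(?:\.\d+)?)/.exec(message);
  if (next.amount === undefined && amountMatch) {
    next.amount = parseFloat(amountMatch[1]);
  }

  // Enum-style lookup: the first known category mentioned in the message.
  const lower = message.toLowerCase();
  if (next.category === undefined) {
    for (let i = 0; i < CATEGORIES.length; i++) {
      if (lower.indexOf(CATEGORIES[i]) !== -1) {
        next.category = CATEGORIES[i];
        break;
      }
    }
  }
  return next;
}

// Return the next question to ask, or null once every slot is filled.
function nextQuestion(slots: Slots): string | null {
  if (slots.amount === undefined) return "How much did you spend?";
  if (slots.category === undefined) return "Which category does it belong to?";
  return null;
}
```

Each user reply is passed through `fillSlots`, and the conversation continues until `nextQuestion` returns null, at which point the item could be added to the list.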

A future version might extract store names based on context. The current plan is to check the sentence structure, for example by detecting “at XXX”, double-check the result with intent matching through Nlp.js, and then extract the name with a regular expression.
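The regular-expression step of that plan could look roughly like the sketch below. This is only an illustration under my own assumptions: the pattern, the `extractStoreName` helper, and the rule that a store name is a run of capitalized words after "at" are hypothetical, and the Nlp.js intent check is not shown.

```typescript
// Hypothetical pattern: "at" followed by one or more words that each start
// with a capital letter or a digit (so that names like "7-Eleven" also match).
const STORE_PATTERN = /\bat\s+([A-Z0-9][\w'&-]*(?:\s+[A-Z0-9][\w'&-]*)*)/;

// Return the candidate store name, or null when no "at XXX" structure is found.
function extractStoreName(sentence: string): string | null {
  const match = STORE_PATTERN.exec(sentence);
  return match ? match[1] : null;
}
```

For example, `extractStoreName("I spent 20 dollars at Whole Foods yesterday")` returns `"Whole Foods"`, while `extractStoreName("I had lunch at home")` returns `null`. A pattern like this also matches phrases such as "at 5 pm", which is why the intent-matching double check is needed.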

Future work

- Optimize performance, including updating the state only when necessary and retraining the model only when necessary.

- Use local voice recognition that is compatible with the Expo platform, through local TensorFlow training, so that the app works on both Android and iOS with no privacy risk. One project that might be used is speech-command. I will possibly build my own training dataset for each specific function. The current plan is to convert the original audio to a Float32Array and then use the locally trained TensorFlow model to detect and generate the result.

- Location recognition in the conversation based on context. One possible approach is to check the sentence structure, for example by detecting “at XXX”, double-check the result with intent matching through Nlp.js, and then extract the location with a regular expression.
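For the audio conversion step in the voice recognition item, here is a minimal sketch of turning raw samples into a Float32Array. It assumes the recording is available as signed 16-bit PCM samples, which is my assumption and not something the current app provides; `pcm16ToFloat32` is a hypothetical helper name.

```typescript
// Convert signed 16-bit PCM samples (range -32768..32767) into floats
// in the range [-1, 1), a common input format for audio models.
function pcm16ToFloat32(pcm: Int16Array): Float32Array {
  const out = new Float32Array(pcm.length);
  for (let i = 0; i < pcm.length; i++) {
    out[i] = pcm[i] / 32768; // scale by the magnitude of the most negative sample
  }
  return out;
}
```

The resulting array could then be handed to the locally trained model as its input.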

Sample run

Here is a sample run of the released version, shown in the dark theme.